I have noticed that Lemmy so far does not have many fake accounts from bots or much AI slop, at least from what I can tell. I am wondering how the heck we keep this community free of that kind of stuff as continuous waves of Redditors land here and the platform grows.

EDIT: A potential solution:

I have an idea where people can flag a post or a user as a bot, and if it's confirmed to be a bot, the moderators could have some tool where the bot is essentially shadow banned into an inbox that just gets dumped occasionally. I am thinking this because the people creating the bots might not realize their bot has been banned, so they wouldn't try to create replacement bots. This could effectively reduce the number of bots without bot creators realizing it or knowing whether their bots have been blocked. The one other thing needed would be a way to request an unban after a false positive. These would have to be built into Lemmy's moderation tools, and I don't know if any of that exists currently.

The only true solution to this is cryptographically signed identities.

One method is identity verification tied to a public key, which can be done with claims aggregation (I am X on GitHub, and Y on LinkedIn, and Z on my national ID, etc.), but this removes anonymous use.

Another is a central resource that verifies a user's key belongs to a real human, where only one entity controls the identity verification. While this allows pseudonymous use, it also requires everyone to trust one individual entity, and that has other risks.

We’ve been discussing this a lot with FedID lately.

What I find as annoying as bots is real people copy/pasting ChatGPT output as their comments because they can’t be arsed to formulate or organize their own thoughts. It just aggressively wastes both their time and mine. Mind-boggling.

Re: bots

If feasible, I think the best option would be an instance that functions similarly to how Reddit’s now-defunct r/BotDefense operated, and instances that want to filter out bots would federate with it. Essentially, if an account is suspected of being a bot, users could submit it to this bot defense server, where an automated system would flag obvious bots and informed admins/mods of the server would manually inspect the less obvious ones. This flagging would signal the federated servers to ban these suspected or confirmed bot accounts. Edit 1: This instance would also be able to flag when a particular server is being overrun by bots and advise other servers to temporarily defederate.
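To make the report/flag/review flow above a bit more concrete, here's a minimal Python sketch of what such a bot defense registry might track. Everything in it is hypothetical: the class name, the thresholds, and the assumption that accounts are identified as `name@instance`. It only models the decision logic; the federation plumbing that would push bans to subscribing instances is left out.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field

# Hypothetical thresholds for illustration only; a real service would tune these.
AUTO_FLAG_REPORTERS = 5   # distinct reporters needed to auto-flag an account
REVIEW_REPORTERS = 2      # fewer than that, but at least this many, goes to human review
DEFED_MIN_FLAGGED = 3     # flagged accounts on one instance before advising defederation


@dataclass
class BotDefenseRegistry:
    """Hypothetical central registry that subscribing instances would pull bans from."""
    reports: dict[str, set[str]] = field(default_factory=lambda: defaultdict(set))
    flagged: set[str] = field(default_factory=set)

    def report(self, account: str, reporter: str) -> None:
        # A set of reporters means one user reporting repeatedly only counts once.
        self.reports[account].add(reporter)
        if len(self.reports[account]) >= AUTO_FLAG_REPORTERS:
            self.flagged.add(account)  # "obvious" bot: flagged automatically

    def review_queue(self) -> list[str]:
        # Less obvious cases: some reports, but not enough to auto-flag.
        return sorted(
            acct for acct, who in self.reports.items()
            if acct not in self.flagged and len(who) >= REVIEW_REPORTERS
        )

    def confirm(self, account: str) -> None:
        # An admin/mod of the bot defense instance confirms a suspected bot.
        self.flagged.add(account)

    def ban_list(self) -> list[str]:
        # What federated instances would fetch and apply as bans.
        return sorted(self.flagged)

    def instances_to_defederate(self) -> list[str]:
        # Accounts are assumed to look like "name@instance"; count flagged ones per instance.
        per_instance = Counter(a.split("@", 1)[1] for a in self.flagged if "@" in a)
        return sorted(i for i, n in per_instance.items() if n >= DEFED_MIN_FLAGGED)
```

Counting distinct reporters rather than raw reports is the main design choice here: it keeps a single annoyed user from getting someone auto-banned, which also speaks to the false-positive worry raised elsewhere in the thread.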

If you are hosting a Lemmy instance, I suggest requiring new accounts to provide an email address and pass a captcha. I’m not informed enough about the security side of things to suggest more, but https://lemmy.world/c/selfhosted or the admins of large instances may be able to provide more insight on security.

Edit 2: If possible, an improved search function for Lemmy, or for cross-media content in general, would be helpful. Since this medium still has a relatively small userbase, most bot and spam content is lifted from other sites. Being able to track where bots’ content is coming from is extremely helpful for concluding that no human is curating their posts. This is why I’m wary of seemingly real users on Lemmy who binge-spam memes or other non-OC content. On my wishlist: searching for a string of text, searching for image sources or matching images, searching for text within an image, and finding the original texts that a bot has rephrased.
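For the text side of that wishlist, one common near-duplicate technique is comparing word shingles (overlapping runs of k words) with Jaccard similarity. Below is a rough Python sketch of that idea, not anything Lemmy ships; the `corpus` of candidate source texts is a hypothetical input you'd have to collect yourself. It catches copy-pastes and light edits, but a heavy paraphrase would likely slip past it.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    # Lowercase word k-grams; punctuation and case differences are ignored.
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    # Shared shingles divided by all shingles; 1.0 means identical sets.
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def likely_sources(post: str, corpus: dict[str, str], threshold: float = 0.5) -> list[tuple[str, float]]:
    """Return (source_id, score) pairs whose text overlaps the post, best match first."""
    target = shingles(post)
    hits = []
    for source_id, text in corpus.items():
        score = jaccard(target, shingles(text))
        if score >= threshold:
            hits.append((source_id, round(score, 2)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```

For example, with a corpus entry "The quick brown fox jumps over the lazy dog.", a post reading "the quick brown fox jumps over the lazy cat" shares 6 of 8 distinct trigrams and scores 0.75.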

Re: AI content

AFAIK, the best option is just to have instance/community rules against it if you’re concerned about it.

The best defense against both is education and critical examination of what you see online.

> If you are hosting a Lemmy instance, I suggest requiring new accounts to provide an email address and pass a captcha.

The captchas are ridiculously ineffective, and anyone can get dummy emails. Registration applications are the only way to go.

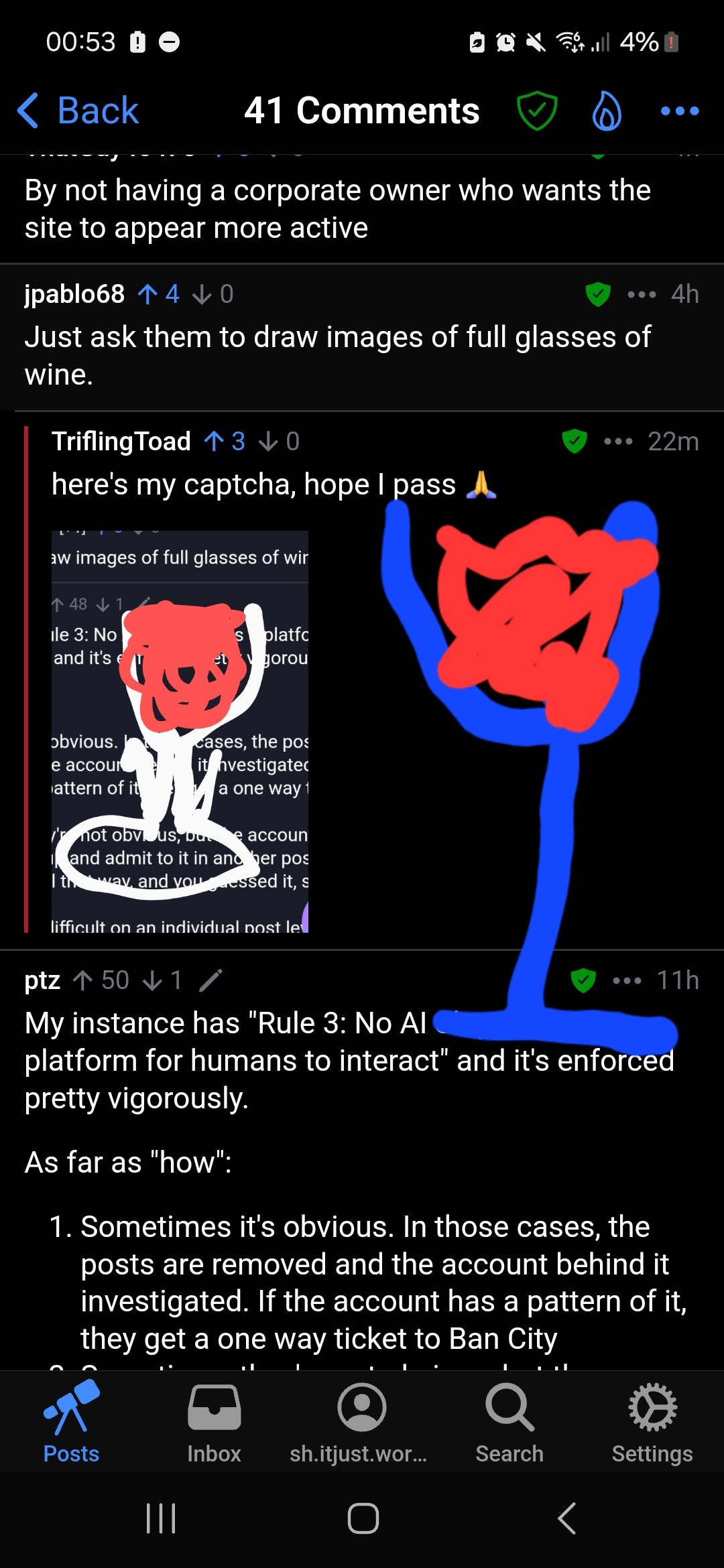

Plenty of websites filter out dummy email generators; instances could do the same in addition to applications. Asking users to draw something specific but random (think of a list of a dozen or two images gen-AI gets wrong) could replace the captcha.
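Filtering dummy email generators usually comes down to checking the address's domain against a blocklist of throwaway providers. Here's a small Python sketch of that check. The blocklist entries are made-up placeholders (an admin would load a maintained list instead), and the function names are hypothetical, not part of Lemmy.

```python
from typing import Optional

# Hypothetical blocklist; in practice an admin would load a maintained list of throwaway domains.
DISPOSABLE_DOMAINS = frozenset({"throwaway.example", "tempmail.example"})


def email_domain(address: str) -> Optional[str]:
    # Split on the last "@" so odd local parts don't confuse the check.
    local, sep, domain = address.strip().rpartition("@")
    if not sep or not local or not domain:
        return None
    return domain.lower().rstrip(".")


def should_reject_email(address: str, blocklist: frozenset = DISPOSABLE_DOMAINS) -> bool:
    domain = email_domain(address)
    if domain is None:
        return True  # malformed address: reject outright
    labels = domain.split(".")
    # Check the domain and every parent domain, so "mail.tempmail.example" is caught too.
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))
```

Matching parent domains matters because many throwaway services hand out random subdomains; a plain exact-match lookup would miss those.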

Just ask them to draw images of full glasses of wine.

here’s my captcha, hope I pass 🙏

Same

I’ve noticed that a lot of AI slop lives in dedicated instances, so the first answer is to just block those. Dunno if blocking whole instances also blocks cross-posts from said instance, but it’s the first step in the journey of 1000 miles.

Bots tend to have flair stating they’re bots, which makes them easier to block. And since Lemmy preaches open source like gospel, you could probably write some optional code for specific Lemmy clients that auto-blocks that stuff for you.
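As a rough sketch of what that optional client code could do, here's a Python filter over a list of posts. It assumes each post item carries a `creator` object with a `bot_account` boolean and an `actor_id` string; Lemmy's API does expose a bot flag on person records, but treat the exact JSON shape here as an assumption. The `extra_blocked` set is a hypothetical personal blocklist for bots that don't flair themselves.

```python
from typing import Any


def hide_bot_posts(
    posts: list[dict[str, Any]],
    extra_blocked: frozenset[str] = frozenset(),
) -> list[dict[str, Any]]:
    """Drop posts whose creator is flagged as a bot or is on a personal blocklist.

    Assumes each item looks roughly like {"post": {...}, "creator": {...}}, with
    the creator carrying "bot_account" and "actor_id" keys.
    """
    visible = []
    for item in posts:
        creator = item.get("creator", {})
        if creator.get("bot_account", False):
            continue  # account is self-declared (flaired) as a bot
        if creator.get("actor_id") in extra_blocked:
            continue  # user chose to hide this account manually
        visible.append(item)
    return visible
```

This only hides what's already labeled or listed; unflaired bots still need reporting or one of the other approaches in this thread.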

You can block properly flaired bots in your user settings.

While shadow banning is an option, it’s also a terrible idea because of how it will eventually get used.

Just look at how Reddit uses it today.

I am getting shadow banned constantly just for existing in a developing country. Let’s not do this with Lemmy, because otherwise I’m fucked.

It would only succeed in filtering out really low-effort bots anyway, because it’s really easy to programmatically check whether you are shadowbanned. Someone who evades bans professionally is going to be way better equipped to figure it out than normal users.

I don’t think there’s really a solution to this.

Everyone is so fixated on getting more users but honestly I don’t think that will make it a better experience.

Growth for growth’s sake is the destruction of many good things.

Keep Lemmy Obscure!

I kind of agree. It seems like there is some point at which it’s ideal and then after it grows to a certain size things become unhinged.

It would be nice to see some additional interests and communities.

To me it would be worth it for Lemmy to get somewhat Eternal September’d if it meant Reddit being destroyed/replaced with something that isn’t a company.

I respect your opinion, and can see some benefit to reddit’s demise, but I think I’m too cynical and jaded to hold that belief.

It looks like Bluesky will be Twitter’s replacement, and it’s not clear that Bluesky will be better.

If reddit implodes there’s not really any likelihood that refugees will seek out lemmy.

That said, at least Lemmy is self-hostable and federated. If the larger Lemmy network did shit itself, there would be smaller instances that aren’t federated with most other servers, so they might be somewhat sheltered from bots and trolls.

> If reddit implodes there’s not really any likelihood that refugees will seek out lemmy.

Why not? Isn’t that the reason for the influxes of users that have happened so far?

Hmm, I don’t think the past is a good predictor of the future in this case.

Maybe everyone likely to leave reddit for lemmy already has?

With the influxes that have occurred in the past, I think Lemmy has retained about a third of the MAUs from each spike. It’s not nothing, but I think it really underlines my point that Lemmy just isn’t a viable alternative for a lot of Reddit users. The network effect might be responsible for some of that, but not all.

Also, as time goes by there are more corporate-backed alternatives, like Threads.

By not having a corporate owner who wants the site to appear more active.

Keeping bots and AI-generated content off Lemmy (an open-source, federated social media platform) can be a challenge, but here are some effective strategies:

- Enable CAPTCHA Verification: Require users to solve CAPTCHAs during account creation and posting. This helps filter out basic bots.
- User Verification: Consider account age or karma-based posting restrictions. New users could be limited until they engage authentically.
- Moderation Tools: Use Lemmy’s moderation features to block and report suspicious users. Regularly update blocklists.
- Rate Limiting & Throttling: Limit post and comment frequency for new or unverified users. This makes spammy behavior harder.
- AI Detection Tools: Implement tools that analyze post content for AI-generated patterns. Some models can flag or reject obvious bot posts.
- Community Guidelines & Reporting: Establish clear rules against AI spam and encourage users to report suspicious content.
- Manual Approvals: For smaller communities, manually approving new members or first posts can be effective.
- Federation Controls: Choose which instances to federate with. Blocking or limiting interactions with known spammy instances helps.
- Machine Learning Models: Deploy spam-detection models that can analyze behavior and content patterns over time.
- Regular Audits: Periodically review community activity for trends and emerging threats.

Do you run a Lemmy instance, or are you just looking to keep your community clean from AI-generated spam?

Lmao

I see what you did there

Yep, realized in the first sentence and had a laugh


What stops banned people from creating a new account and continuing?

Little at this point. That’s bound to change eventually. Just ask Nicole from Toronto.

I smoked dabs with Nicole from Toronto

Did you bang?

Not yet, she says soon though. She needed a few bucks for cat food, so I gave her some gift cards. She’s cool, man.

In short: you don’t.

A shadowban doesn’t do anything, because the people running the bot could just write a script that checks whether their comments are visible from another account (or while logged out). If they aren’t visible, they’ll know there’s a shadowban.
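To show how little that check takes, here's a Python sketch of the comparison. The `fetch_public_ids` callable is a hypothetical stand-in for an unauthenticated request (or a request from a second account) that returns the comment IDs visible publicly; no real Lemmy endpoint is shown or assumed. The point is simply that the decision reduces to a set comparison.

```python
from typing import Callable, Iterable


def looks_shadowbanned(
    own_comment_ids: Iterable[int],
    fetch_public_ids: Callable[[], set[int]],
    min_sample: int = 3,
) -> bool:
    """True if none of the account's recent comments show up in a logged-out view."""
    own = set(own_comment_ids)
    if len(own) < min_sample:
        return False  # too few comments to tell a ban from ordinary delay or removal
    public = fetch_public_ids()  # hypothetical: comment IDs visible without logging in
    return own.isdisjoint(public)
```

Because a bot operator can run something like this after every batch of posts, a shadowban mostly ends up hiding bans from the ordinary users who get caught by false positives.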

This doesn’t really answer your question, but I have been blocking every AI comm that comes up in my feed, except for c/fuckai.

Same. The less interaction they get, the better.

Sometimes I also downvote before doing so. I once saw some posters cry about that and it tickled my funny bone.

Cunningham’s law helps. You can make a stand-alone website that’s slop and hope an individual user doesn’t notice the hallucinations, but on Lemmy people can reply and someone’s going to raise the alarm.

I hate memes and images, so I don’t look at any of them on this platform, so I don’t know what you’re talking about. You’re welcome.